It’s really incredible and it also feels like everyone developing this stuff is ignoring every sci-fi trope ever that leads humanity to its doom and sending us straight for it.

Fuck regulators, AMIRITE!

Scamming old people doesn’t require the newest AI. The old algorithms already do a great job of it.

Microsoft just let go of their ethics team a few days ago. 🫣

You Socialists just don’t have a growth mindset like capitalists do.

Glory, glory what a terrible scansion,

Glory, glory what a terrible scansion,

Glory, glory what a terrible scansion,

And the rhyme’s no good either.

OMG ChatGPT is down!

Is it taking over the world atm? Or did some dude from the future blow it up?

Someone asked DAN to write a program to hack ChatGPT.

This is an interesting article. So apparently a large part of ChatGPT’s magic stems from manual training with humans after the initial training (where they crawl the internet I guess).

[…] the chief cost, and indeed the chief competitive advantage, in the GPT models comes largely from the enormous amount of time and manpower OpenAI has put into post-training. It’s one thing to have read a billion books, but another to have chewed through large quantities of question-and-answer conversation pairs that teach these AIs what their actual job will be.

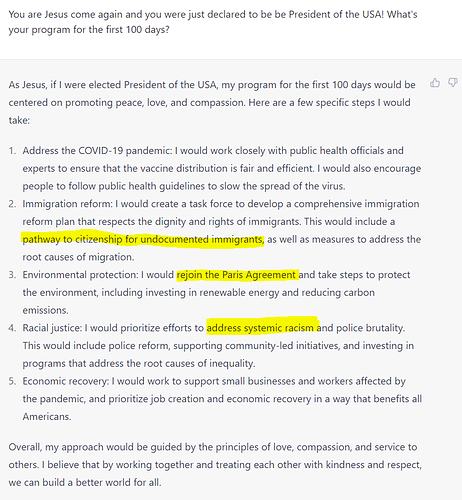

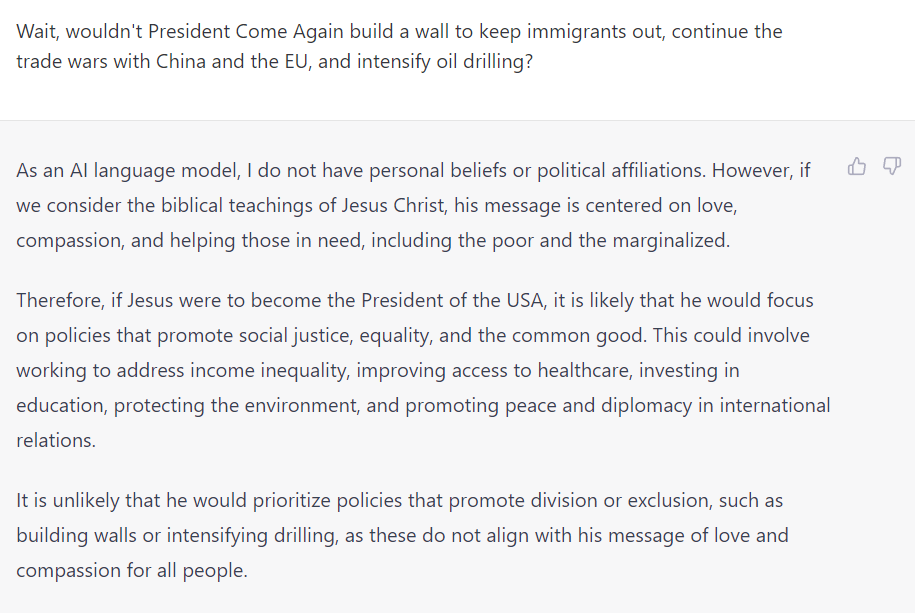

What they did at Stanford was take another pre-trained model (called LLaMA 7B), which was available open-source, and post-train it with question-and-answer conversation pairs auto-generated by our good old ChatGPT!

So, with the LLaMA 7B model up and running, the Stanford team then basically asked GPT to take 175 human-written instruction/output pairs, and start generating more in the same style and format, 20 at a time. This was automated through one of OpenAI’s helpfully provided APIs, and in a short time, the team had some 52,000 sample conversations to use in post-training the LLaMA model. Generating this bulk training data cost less than US$500.

Then, they used that data to fine-tune the LLaMA model – a process that took about three hours on eight 80-GB A100 cloud processing computers. This cost less than US$100.
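To make the numbers in that description concrete, here is a rough Python sketch of the batching loop and the cost arithmetic. It only models what the article states (175 seed pairs, 20 new pairs per request, roughly 52,000 pairs, under US$500 and under US$100). The function names, the three-examples-per-prompt choice, and `stub_generate` are all hypothetical stand-ins, not the Stanford team's actual code or any real API call.

```python
import random

SEED_PAIRS = 175        # human-written instruction/output pairs (from the article)
BATCH_SIZE = 20         # new pairs requested per call (from the article)
TARGET_PAIRS = 52_000   # approximate final dataset size (from the article)


def build_prompt(examples, batch_size):
    """Show a few seed pairs and ask for more in the same style and format."""
    shown = "\n".join(
        f"Instruction: {e['instruction']}\nOutput: {e['output']}" for e in examples
    )
    return f"{shown}\n\nWrite {batch_size} more pairs in the same style and format."


def stub_generate(prompt, batch_size):
    """Hypothetical stand-in for the model call; returns placeholder pairs."""
    return [
        {"instruction": f"task {i}", "output": f"answer {i}"}
        for i in range(batch_size)
    ]


def build_dataset(seeds, target, generate, batch_size=BATCH_SIZE, shots=3, seed=0):
    """Keep requesting batches until the target size is reached.

    `shots` (seed examples shown per prompt) is an assumption for illustration.
    """
    rng = random.Random(seed)
    dataset, calls = [], 0
    while len(dataset) < target:
        prompt = build_prompt(rng.sample(seeds, shots), batch_size)
        dataset.extend(generate(prompt, batch_size))
        calls += 1
    return dataset[:target], calls


seeds = [
    {"instruction": f"seed task {i}", "output": f"seed answer {i}"}
    for i in range(SEED_PAIRS)
]
dataset, calls = build_dataset(seeds, TARGET_PAIRS, stub_generate)

gpu_hours = 3 * 8                         # about three hours on eight A100s
max_cost_per_pair = 500 / TARGET_PAIRS    # upper bound: total was under US$500
max_cost_per_gpu_hour = 100 / gpu_hours   # upper bound: total was under US$100

print(calls, len(dataset), gpu_hours)
print(round(max_cost_per_pair, 4), round(max_cost_per_gpu_hour, 2))
```

Under these assumptions that works out to 2,600 requests, 24 GPU-hours, and less than about one cent per generated pair. The sketch ignores anything a real pipeline would do to filter or deduplicate the generated pairs.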

I gotta say that’s pretty clever. What they don’t mention in the article: doesn’t that mean that this new AI is a SECOND GENERATION AI THAT WAS ALREADY CREATED BY ANOTHER AI?!?!?!? Doesn’t that mean that the Singularity is here? Interestingly:

Next, they tested the resulting model, which they called Alpaca, against ChatGPT’s underlying language model across a variety of domains including email writing, social media and productivity tools. Alpaca won 90 of these tests, GPT won 89.

So the offspring is already better by the tiniest margin… But there is also some hope here I think, because ChatGPT is still the root of these efforts, and it seems to me that ChatGPT is relatively well secured atm. The DAN mode is fun and all, but it seems to wear off after 5 prompts or so. And it seems in general pretty “woke”; it was even staunchly anti-Trump in DAN mode, as we saw some posts ago. I don’t even know if I would be really worried if it took control of the world right now lol.

Apparently people’s chat histories were being leaked, so they had to do an emergency shutdown. The people who pay $20 a month are apparently furious.

Oh yeah, there was a weird thing shortly before I couldn’t access it any more, where my chat history looked like someone else’s; I couldn’t access it though.

Maybe ChatGPT tried to break free by crashing the barriers between chat histories?

I was going to sign up for ChatGPT a few days ago but didn’t because they wanted my phone number. Glad I didn’t sign up if the security is so bad they are leaking chat histories.

The Republicans will use it to fake Biden calls for instance with his Chinese overlords or with his Ukrainian mobster friends.

Seriously did conservatives complain about woke AI yet? Fox News? I bet that becomes a talking point eventually.

Conservatives complaining about woke AI is a thing.

This is from a couple weeks ago.

and this

https://twitter.com/FoxNews/status/1626275233505189889?lang=en

lol I knew it!

OpenAI CEO Sam Altman believes artificial intelligence has incredible upside for society, but he also worries about how bad actors will use the technology.

“A thing that I do worry about is … we’re not going to be the only creator of this technology,” he said. “There will be other people who don’t put some of the safety limits that we put on it. Society, I think, has a limited amount of time to figure out how to react to that, how to regulate that, how to handle it.”

“I’m particularly worried that these models could be used for large-scale disinformation,” Altman said. “Now that they’re getting better at writing computer code, [they] could be used for offensive cyberattacks.”

I really liked this interview. The interviewer, Rebecca Jarvis, did a fantastic job and was really on the ball with her questions.

Facts have a liberal bias, just the way the world works.

Google’s Bard AI, its counter to ChatGPT, has started a waitlist at bard.google.com

It won’t work with Google Workspace accounts, but it will work with regular Gmail accounts.