ChatGPT not exactly kicking ass here. I guess the word I was looking for, grossfeelich, is a little obscure. But I found it in enough places that I think it’s good.

GPT-3.5 is the LLM used by the Amish, but it’s not the best for questions about the Amish. Use GPT-4, which is free in Copilot.

I randomly started using ChatGPT for the first time yesterday.

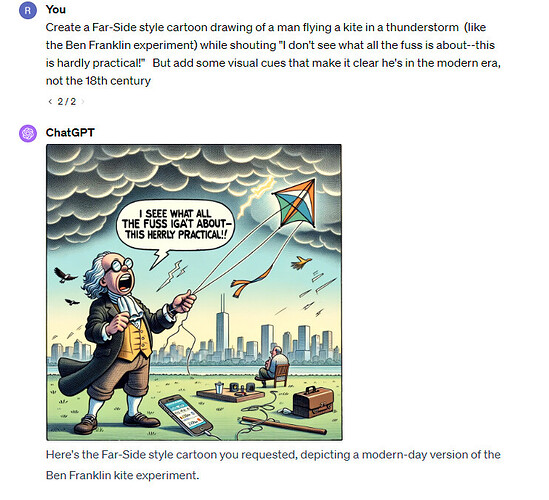

Can’t write screenplays for shit.

I went to a Second City show and they did a skit that was really weird, crazy, and hard to follow for about 90 seconds before one of them said “this was poorly written, whose idea was this?”

“It’s the new AI generated sketch”

Ran a training session for my team (old sales guys) on how to use ChatGPT. Huge success. Minds were blown.

Did a whole screen share thing

Also, I didn’t think much about the fact that it saves old conversation history and lists it on the screen next to your chat.

So.

Did everyone know that the Latvian translation for “sharp knife” is “Ass Nazis”? ChatGPT helped me with that a few weeks ago.

My whole sales team knows it now…

AI hedging, lying, excuses, apologies, and excess verbiage are annoying AF.

You have to prompt it around its guardrails and make the situation you are asking about hypothetical. That is how I have seen it give more accurate answers when it was talking in circles.

As part of doing my master’s, I’m doing a subject on construction law. It’s the first time I’ve had to learn much about how the law operates.

Interesting stuff. But I’m also concluding. Oh boy. Lawyers are so fucked. Fucked like translators are fucked. Fuckedy fucked.

Huge amounts of the law seem to be about reading and understanding the relationships between concepts and words, requiring exceptional breadth, and the ability to turn that into customised arguments for a specific case.

All things which ChatGPT is pretty good at now, and has a clear path to being very very good at in a year or so.

Interested if any lawyers have a different view?

ChatGPT is not good at “understanding the relationships between concepts and words, requiring exceptional breadth, and the ability to turn that into customized arguments for a specific case” and it lies and hallucinates constantly.

If AI can eventually take over legal work it will also be able to take over things like science, philosophy, and government policy. I’m hoping for that to happen, because human beings are stupid, but I expect it will take at least 100 years to get rolling.

- The hallucination issue is going to be a huge barrier to completely replacing attorneys.

I (and probably every attorney I know) would love a tool that could respond to prompts like, “what are all of the elements of equitable estoppel and how do they apply to the case file I just uploaded” or “using current state and federal tax law, design a financial plan for this family that minimizes taxes. How much, if any, additional money could be saved if the family relocates from San Francisco to Austin?” But, if ChatGPT is running around citing fake cases, I’ll keep doing the work myself. And, more importantly, clients and malpractice providers are still going to want me to do the work in most situations.

- Don’t underestimate the ability of the legal industry to slow walk innovation and change. While clients and some attorneys may push for quick adoption as soon as a minimum standard is met, the legal industry as a whole is going to want to keep lawyers in jobs, so I expect that we’ll do a lot of lobbying for rules that slow change (just like we did with things like LegalZoom or the way tax preparers have tried to stay involved in tax preparation even as technology should have allowed for an easier and more efficient process).

Bottom line is that I know the computer will eventually take my job, but the technology will probably be able to outperform me quite a few years before it’s actually allowed to do so IRL.

Pretty sure lawyers lie and hallucinate a lot too. I’m also pretty sure ChatGPT will take less than 100 years to sort that out.

ChatGPT when accused of dishonest lawyering

I agree on the 100 years part: that timeframe seems way too long. As far as the lying and hallucinations go, I see that discussion evolving similarly to the way we talk about self-driving cars. I have no doubt that, particularly under certain conditions, self-driving cars can perform better than many human drivers. But every time a Tesla crashes while in autonomous mode, it’s a major news story and the timeline for full adoption gets pushed back. People would rather keep their hands on the wheel, even if it means more accidents. And I think in a weird subconscious way we’d rather be lied to by another human than by a computer.

I don’t think GPT-4 will do it, but I’m quite confident the next OpenAI model will be capable of writing a merger agreement better than a mid-level BigLaw associate.

Research and analysis are harder, although I’d be curious to see what a dedicated RAG setup can do with an unhandcuffed GPT-5.