I don’t get it either. It’s stupid to keep millions of dollars in your checking account. I don’t get it.

FYP

I assume it’s the same series.

Blackhawks fan huh?

Might make sense if you’re really rich and need to pay off 6 or 7 figure credit card bills every month.

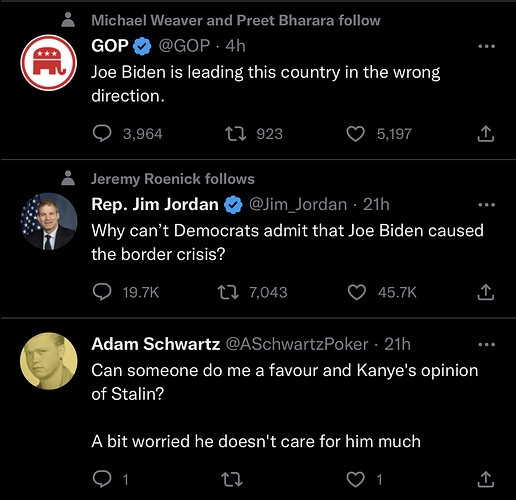

why can’t i follow for the political takes?

I just think there are better accounts to manage your money than a regular account with an ATM card. You are only insured for 250k. If you have millions you probably have the services of a private bank.

There are many reasons someone might have millions in their checking account. Maybe they just sold a property or a business. Or maybe the crypto company they founded just declared bankruptcy and they needed a safe place to store some funds.

This guy usually hates everything.

https://twitter.com/WKCosmo/status/1598671632465154049?s=20&t=TNAAtsg1c3-rsKPJGnNNgA

This looks like pure “Chinese Room” stuff to me. We should definitely not assume any level of understanding. Its relation to understanding is the same as the relation of events in Minecraft to physics and chemistry: a superficial simulation.

The Chinese room isn’t a solved problem! The AI could churn out any kind of incredible art or solve any problem and you’d still say it’s just “simulating” intelligence.

If AI can translate normal language to regex and back again then it all would have been worth it.

Offhand, I say the manner of “knowledge representation” in the system has to be such that it supports inference to new domains and enables the influence of other representations on the target domain, not just “domain specific” regurgitation with some linguistic-rule transformation of output.

That’s why I make the Minecraft analogy. Systems of game chemistry/physics attempt to emulate reality at the highest output level, but chemistry/physics in reality depends on the interaction of atoms in a consistent manner in light of the “laws” of physics, which gives all kinds of surprising and “unpredictable” results. It would probably be easy to emulate, say, hydrocarbon polymerization by hard coding certain rules, but those rules could not then be used to predict/model, say, how to make meth.

If the AI is simply the high level recapitulation of human understanding in various narrow domains, where that understanding is then exploited in “interpreting” the output of the AI, then that’s just sleight of hand. A test of AI should never be based on whether “linguistic” output “looks good” to a human (the Turing test is a terrible AI filter), but whether the AI system can use knowledge in a domain, or ideally, from a different domain, to make inferences that guide behavior.

I don’t have time to write an essay atm, and my views aren’t as sharp as when I was reading a lot of relevant philosophy in the area, but the idea is that you want a system that operates a lot more “flexibly”, like a dog or a cat or even an ant, not just a look-up table, and my sense is that GPT-3 is not giving you even near ant-level reasoning abilities (such as rapidly avoiding an obstacle in the same way a similar obstacle was previously avoided via trial and error).

I think a key in the area is setting up systems with the right base level “representational” capacities. That’s likely just connected nodes which should be akin to neurons (realizing that actual neurons are complex, and we don’t yet know which functional aspects can be ignored), but after you have that I think you need to build nodal interactions in a way that is akin to actual nervous systems, where there is cross-influence all over the place (again, even in bee brains, not just humans).

I think we’ll get there eventually, because we have plenty of working exemplars, but I think trying to simply emulate the highest level cognitive functions is exactly the wrong way to approach the problem. Build bug AI, then work up from there.

Isn’t the Turing test that you could throw any information at it and expect it to respond in a way that is indistinguishable from a human?

I.e. you could show it video of an ant and say, "Tell us what the ant should do to stay safe."

Not really. The Turing test is about “fooling” humans about whether they are interacting with a computer.

Regarding the “Chinese Room,” note that:

John Searle’s 1980 paper Minds, Brains, and Programs proposed the “Chinese room” thought experiment and argued that the Turing test could not be used to determine if a machine could think. Searle noted that software (such as ELIZA) could pass the Turing test simply by manipulating symbols of which they had no understanding. Without understanding, they could not be described as “thinking” in the same sense people did. Therefore, Searle concluded, the Turing test could not prove that machines could think.[34] Much like the Turing test itself, Searle’s argument has been both widely criticised[35] and endorsed.[36]

Arguments such as Searle’s and others working on the philosophy of mind sparked off a more intense debate about the nature of intelligence, the possibility of intelligent machines and the value of the Turing test that continued through the 1980s and 1990s.[37]
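
For anyone who hasn’t seen what “manipulating symbols without understanding” looks like in practice, here is a minimal ELIZA-style sketch. To be clear, the rules, the fallback line, and the `respond` function are all made up for illustration; this is not ELIZA’s actual script, just the general pattern-match-and-fill-a-template idea.

```python
import re

# Hypothetical rules for illustration only; not ELIZA's real script.
# Each rule pairs a surface pattern with a reply template.
RULES = [
    (re.compile(r"\bI am (.+)", re.IGNORECASE), "Why do you say you are {0}?"),
    (re.compile(r"\bI feel (.+)", re.IGNORECASE), "How long have you felt {0}?"),
    (re.compile(r"\bmy (\w+)", re.IGNORECASE), "Tell me more about your {0}."),
]
FALLBACK = "Please go on."


def respond(text: str) -> str:
    """Return a canned reply by matching surface patterns only."""
    # Drop surrounding whitespace and trailing punctuation before matching.
    cleaned = text.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            # Echo the captured words back; nothing is interpreted.
            return template.format(match.group(1))
    return FALLBACK


if __name__ == "__main__":
    print(respond("I am worried about AI."))
    print(respond("I am glorp."))
    print(respond("The weather is nice."))
```

The program echoes “glorp” back just as readily as a real word, which is the sense in which nothing here requires understanding. Whether that says anything about much larger systems is exactly what the thread is arguing about.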

Traditional Turing test is text only.

https://twitter.com/emollick/status/1598516528558637056

This one I went with the f word.

I’m not sure how that’s different from what I’m saying.

If the AI can fool you by watching a video and displaying the trait you think it should have… then… it passes the Turing test?

You might not view the “say what the ant should do” test as enough. So run the “say what the human should do” test.

Thinking of the Turing test as “can it run a chat bot for 5 minutes so that you can’t tell” is pretty limited. You can give it ANY info you want and ask it ANY question you want.

I just got this guy’s book “Corruptible”

I just go back to the review on batteries I posted earlier today. If you’re saying the AI doesn’t actually “understand” the topic even after writing a book about it then I think we’re playing word games.