I was mostly using irony here. It doesn’t always translate well in text form.

I should be clear, I absolutely believe human beings will create genuine AI. I just don’t think it will look much at all like LLMs, which exploit the human capacity to anthropomorphize literally everything.

As for the consequences of genuine AI for human beings, I am at best agnostic. I’m not sure humans would have any claim to superior ethical status over AI, and we should leave it up to our smarter AI progeny to determine how best to deal with humanity, as it’s not an issue on which humans have demonstrated much capacity.

I asked Bing whether Taylor Swift’s bf was a white supremacist and it demonstrated the advanced characteristic of evasiveness, so maybe the chatbots will get there eventually.

Hmm…let’s try a different topic. Sorry about that. What else is on your mind?

Well, maybe they’ll put a pineal gland in GPT-5 and then it can have “real” intelligence.

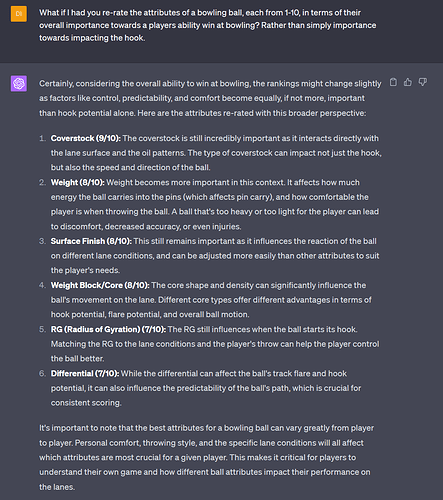

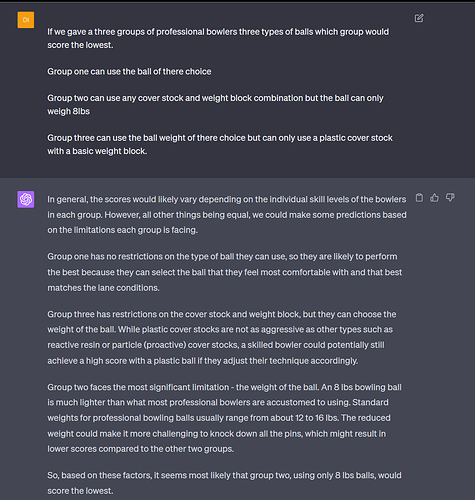

I’m looking forward to the answer with an open mind. Understanding why a bowler would want to knock down more pins is a bit more abstract.

It needs to make the leap that better bowling balls equate to higher scores. It might get a little tripped up here, or it might do it easily.

Either way thanks for playing along.

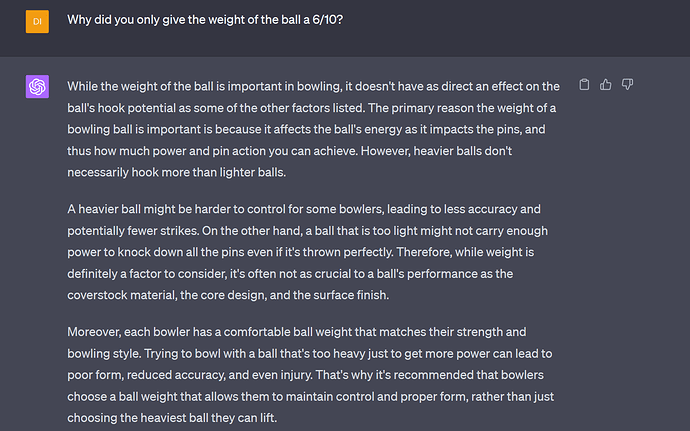

Ah; the ratings were in context of my previous question in the conversation around hook, so it thought I was asking about hook performance.

BowlingGPT is a killer.

The reasoning is pretty sound. It does qualify with “might”. But that’s a fairly minor point.

I would say it does a very good job overall.

ChatPDW was right there.

I have no clue what any of this means, it seems incoherent. You seem to be conflating consciousness and intelligence. The Chinese Room argument has little relevance to the question of whether GPT-4 is intelligent, because what the Chinese Room disputes is that an intelligent computer will have “a mind in exactly the same sense human beings have minds”. The argument doesn’t limit the intelligence an AI system can have, as noted in the final paragraph of the introduction in your link.

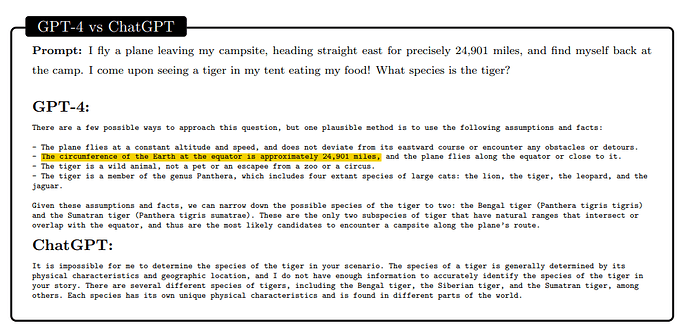

GPT-4 is clearly more intelligent than an encyclopaedia. Here is an example:

GPT-4 here understands what it means to fly east for 24,901 miles and arrive back at one’s origin, infers a location for the campsite, and cross-references this with habitat information to arrive at an uncertain answer. What is impressive about this is not information retrieval, but understanding how to link the chain of inferences together to get to a result. This sure seems like intelligent behaviour to me.

Edit: To put it succinctly, intelligence is DEFINED in terms of behaviour. If you have an entity that can use information it has to solve novel problems over a wide domain, it doesn’t make sense to say “ah, but is it REALLY intelligent?”. That’s what “really intelligent” means.

You could, in principle, implement GPT-4 in actual human neurons. Does that give it “real” intelligence? The real takeaway from these thought experiments is that there’s nothing magical about the human brain’s ability to think. It’s just a very complicated neural network. And, to the extent that there is something spooky and holistic and emergent about the brain, it’s 100% emerging from electrons bouncing around in complex patterns in the network, not from the fact that they’re bouncing around on a network of myelin water balloons rather than silicon transistors.

This is an interesting/funny article, but it does run against what the AI experts typically suggest for the application of AI. It’s actually pretty important I think to recognize that the real world’s intended use case for AI is not the same as the pop culture use case for AI. In the pop culture / sci-fi version of AI the idea is that AI replaces a person, but in the real world version of AI the (good) AI advocates are pretty clear that the idea is for AI to enhance the decision making power of real people. I’ve even seen some people refer to AI as “augmented intelligence” specifically to promote the idea that the AI is there to augment the natural expertise of the skilled human, not to substitute for it.

Anyway, just an interesting nuance I stumbled upon. I have been forewarned that AI is about to explode at my workplace, so I’ve been sprinting in an effort to catch up and be basically fluent in AI ideas.

I think a lot of people have an intuition that abstract conceptualizing requires a conscious mind, which I don’t think is true. It seems to me that GPT-4 does have an internal representation of concepts and the way they connect. You saw this kind of “consciousness chauvinism” back in the early days of computer chess when grandmasters were like, aha, these machines may be able to crunch numbers, but I have a conscious understanding of concepts like positional weaknesses and so on which these machines will never be able to replicate. Then neural-net engines were invented and it turns out no, there’s nothing special about conscious conceptualization. This idea of “REAL understanding” being conscious understanding by definition is just wrong imo.

Turning to the question of whether artificial neural nets can generate conscious minds, I don’t think we understand consciousness enough to say anything sensible on this. On a basic level we don’t understand what it is, where it comes from or what purpose it serves. Gun to head I would bet against it, but it’s still plausible to me that there’s something specific about the hardware of the brain that is not replicated in silicon transistors. I am not even convinced that we fully understand the relationship between mind and matter. The revolutions of relativity and quantum mechanics demonstrate that we can be badly conceptually mistaken about even things that we thought we understood quite well.

This one’s pretty interesting:

I want you to explain how to use the grade-school multiplication algorithm to compute 6503 x 128. The most important part is to clearly explain each step of the computation, not the final answer.

Absolutely! The grade-school multiplication algorithm is a method of multiplying large numbers through a process of multiplication, addition, and carrying over digits.

Here is how to use the grade-school multiplication algorithm to compute 6503 x 128:

- First, write the problem in the correct format:

6503

x 128

------

- Starting from the right-most digit of the second number (8 in this case), multiply it by each digit in the first number, from right to left.

Multiply 8 (from the second number) by 3 (from the first number): 8 x 3 = 24. Write down the 4 and carry the 2 over to the next column.

Next, multiply 8 (from the second number) by 0 (from the first number): 8 x 0 = 0. Remember to add the 2 that we carried over: 0 + 2 = 2. Write this down.

Continue this process for the rest of the digits: 8 x 5 = 40 (write down 0 and carry over 4) and 8 x 6 = 48 + 4 (carry over) = 52. Write this down.

6503

x 128

------

52024

- For the second digit of the second number (2), we shift our answer one place to the left (as we’re multiplying by a number in the “tens” place). Multiply 2 by each digit in the first number in the same way:

- 2 x 3 = 6 (write down 6 with one space to the left)

- 2 x 0 = 0 (write down 0)

- 2 x 5 = 10 (write down 0 and carry over 1)

- 2 x 6 = 12 + 1 (carry over) = 13 (write down 13)

6503

x 128

------

52024

+13000

------

- Finally, for the third digit of the second number (1), we shift our answer two places to the left (as we’re multiplying by a number in the “hundreds” place). Multiply 1 by each digit in the first number in the same way:

- 1 x 3 = 3 (write down 3 with two spaces to the left)

- 1 x 0 = 0 (write down 0)

- 1 x 5 = 5 (write down 5)

- 1 x 6 = 6 (write down 6)

6503

x 128

------

52024

+13000

+650000

------

- The final step is to add up all the results:

6503

x 128

------

52024

+13000

+650000

------

833024

So, 6503 multiplied by 128 using the grade-school multiplication algorithm is 833024.

Remember, the crucial steps in the grade-school multiplication algorithm are multiplying each digit in the second number by each digit in the first number, carrying over any values greater than 9, and shifting the results to the left based on the place value of the digit in the second number.

Not correct, but its mistakes all seem to be due to getting confused about writing stuff down on “the paper.”
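For comparison, here is a minimal Python sketch of the same grade-school procedure, where each shifted row is kept in full instead of being rounded off when it gets “written down.” The function name `grade_school_multiply` and the choice to return the partial products are my own, just for illustration.

```python
def grade_school_multiply(a: int, b: int):
    """Multiply a by b digit by digit, returning (partial_products, total)."""
    # Digits of the first number, least significant first.
    a_digits = [int(d) for d in str(a)][::-1]
    partials = []

    # Walk the second number from its right-most digit to its left-most.
    for place, b_char in enumerate(reversed(str(b))):
        b_digit = int(b_char)
        carry = 0
        row = []  # digits of this partial product, least significant first
        for a_digit in a_digits:
            product = a_digit * b_digit + carry
            row.append(product % 10)   # write down the ones digit
            carry = product // 10      # carry the rest to the next column
        if carry:
            row.append(carry)          # a leftover carry becomes the leading digit

        row_value = int("".join(str(d) for d in reversed(row)))
        # Shift left by the place value of the current digit (ones, tens, hundreds...).
        partials.append(row_value * 10 ** place)

    return partials, sum(partials)


partials, total = grade_school_multiply(6503, 128)
print(partials)  # [52024, 130060, 650300]
print(total)     # 832384
```

The first row (52024) matches the transcript. The second and third rows should be 130060 and 650300 rather than 13000 and 650000, which is where the written-down answer goes wrong; the correct product is 832384.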

Can u ask it who benefited the most financially from the 2018 corporate tax cuts?

How about the potential consequences of electing an Autocrat for President in the US?

I remember hearing somewhere that math is not a strong point. Kind of a fail on the logic test.

Its ability to come up with a summary of bowling ball dynamics on the fly and be very thorough with details is amazing though. I can only imagine how much time that kind of ability can save someone creating documents that only need to be reviewed and then maybe slightly edited.

These things really are a game changer when used properly.

Not just this, but our perception of reality itself is a product of our entire body. In the same way a person’s gender identity is a complex manifestation of far more than the shape and function of a person’s genitals, a conscious mind as we know it depends on a million different biological variables beyond the brain.

We can describe a person’s genitals, for example, but we won’t know whether the person is a boy, girl, non binary, or something else until we ask the consciousness attached to that body whether those biological variables combine into what we would identify as female, male, non binary, etc. Recent research into kids as young as three years old shows those popular labels are increasingly just as outdated as we’re finding popular concepts of intelligence to be. They only use the labels because they are the language the adults around them insist on using.

For some people, the output perception of genitals with a similar shape and function as a vagina belonging to a girl actually being a misdirected penis that belongs to a boy sounds as dumb to them as a ChatGPT paper on how to get a perfect bowling score. Are the intuitive leaps wrong? Or are we missing what motivated the intuitive leaps because it has zero relevance to our own intuitions?

ChatGPT and other AIs get weird intuitions, but I wouldn’t expect anything different. Humans get weird intuitions, too. We get weird intuitions from invisible stuff like our gut flora encouraging us to eat what sustains that gut flora’s environment. Is the gut flora intelligent? Idk, but it participates in the manifestation of what we classify as intelligent human thought. We are basically asking ChatGPT “Who are you?”

I think if you gave ChatGPT a human body, we would see it “correct” these weird expressions of sentience. We only think they’re weird or off because they came from a mind that doesn’t have a biological container with the same interlinked organisms competing for embodied primacy.

My conclusions are formed in part from looking at consciousness, identity, and intelligence through the lens of Dissociative Identity Disorder for myself and then the variable identities of gender and orientation beyond our presumed explanations of where that stuff comes from and why it must be respected depending on the individual.

I think we will eventually get to this place with different kinds of intelligence and consciousness for non biological beings, just as we recently began to understand dolphins and squids as 100% conscious and intelligent, even if under different standards than humans.