https://medium.com/@enryu9000/gpt4-and-coding-problems-8fbf04fa8134

I’m pretty sure that humanity’s demand for good code is essentially unlimited at the right price. We are gonna get some sweet video games in 10 years.

I don’t see ChatGPT & Co. outright replacing programmers, because I don’t think that AIs can come up with original new algorithms. And even if it codes better than it does now, for stuff like safety critical applications you will always need someone to have a look at it. But it can help a lot with the boilerplate stuff, and with it being used as a productivity booster, we might even see the return of the single programmer coding big SW projects alone.

Imagine going back in time to mid-2022 and showing this to people. Their minds would be completely blown!

https://twitter.com/thestalwart/status/1640326234495131656?s=46&t=9xanL2tZoKj22erGoTuL4A

Imagine if we lived in a society where jobs getting replaced by technology/AI/whatever was seen as a good thing.

I think this is true across a wide range of jobs, not just programming. AI is tailor-made to replace the most repetitive, tedious aspects of white collar work, from data entry to basic Microsoft Office work. These things are also the least enjoyable parts of work, but I can see why people are so resistant to the change. Not everyone wants to be passionate about work. If you can pay your bills just by doing tedious, repetitive spreadsheet work, then a lot of people are going to be happy with that.

This tech in conjunction with AI is kind of a wildcard imo. This might stay on a level where it recognizes simple commands, but imagine if it could start writing the code you think about, or if you could coarsely dictate a text in your mind and the AI fills in the gaps. And then you inspect the result and just think about what should be changed/corrected, which is then instantly implemented by the AI. Or you just record what you thought of all day, let the AI Joyce it up and go get your Booker prize!

Right now ChatGPT struggles with things like basic arithmetic because of the way it works.

For example, if you ask GPT-3/3.5 “If you have 10 books and read 2, how many books do you have?”, it will often say 8, even though reading a book doesn’t reduce how many you have.

It can also struggle to name a five-letter word that is the opposite of start, instead offering three-, four- and six-letter words.

Inspired by a Photoshop in the Trump thread, I asked Bing:

“Draw me a giant marshmallow man with the head of Donald trump”

It refused to do it as I used a blocked word. I changed trump to ‘45th president’ and it did this:

That it can get any complex task done the way it works is really crazy if you think about it. As I understand it, what ChatGPT does is really just coming up with the next word over and over again. It’s basically like in this Rick and Morty scene:

When you ask humans something difficult they usually think before they speak. To do something like coding they build mental models and stuff like diagrams before they start to write code. ChatGPT does none of that, it’s just chatting away.
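To make the “coming up with the next word over and over again” description concrete, here is a minimal sketch of that loop. It is only a toy: the word-pair counting model and the names `train_bigram` and `generate` are made up for illustration, and real GPT models use a large neural network over tokens rather than a lookup table of word counts. The only part it shares with ChatGPT is the outer loop: pick a next word given what has been written so far, append it, repeat.

```python
import random
from collections import defaultdict


def train_bigram(text):
    """Count how often each word follows each other word (toy 'model')."""
    counts = defaultdict(lambda: defaultdict(int))
    words = text.split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts, start, max_words=10, seed=0):
    """Repeatedly sample a next word based only on the previous word."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    out = [start]
    for _ in range(max_words - 1):
        followers = counts.get(out[-1])
        if not followers:
            break  # this word was never followed by anything in training
        words = list(followers)
        weights = [followers[w] for w in words]
        # Sample the next word in proportion to how often it was seen.
        out.append(rng.choices(words, weights=weights, k=1)[0])
    return " ".join(out)
```

Calling `generate(train_bigram("the cat sat on the mat"), "cat", max_words=3)` walks `cat`, `sat`, `on` one word at a time. Nothing in the loop plans ahead, which is what makes it surprising that the same outer loop, driven by a far stronger model, can produce working code.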

Yeah it is crazy that it is just predicting the next token and getting to the places it does. Makes my head melt.

This isn’t true though. GPT forms mental representations of a program it intends to write before starting to code it. To say that all GPT is learning is token prediction is wrong in exactly the same way it’s wrong to say that all you learn in a high school class is how to fill in bubbles on the Scantron test. What GPT is learning is ~all the knowledge in the training set~, and it measures how good of a job it’s doing by seeing how well it can fill in the blanks in random bits of text. We give humans fill-in-the-blank tests to measure learning too!

The right baseline assumption is that LLMs think in qualitatively the same way as humans, they just have different strengths and weaknesses because of their architecture.
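For reference, the “fill in the blanks” scoring mentioned above is usually written as a next-token loss. This is the standard textbook form for GPT-style models rather than anything specific to ChatGPT’s actual training setup, and the symbols are introduced here for illustration: $x_1, \dots, x_T$ is a piece of training text split into tokens, and $p_\theta$ is the model’s predicted probability for a token given the ones before it.

$$
\mathcal{L}(\theta) = -\frac{1}{T} \sum_{t=1}^{T} \log p_\theta\left(x_t \mid x_1, \dots, x_{t-1}\right)
$$

The loss is small when the model assigns high probability to the token that actually comes next, so it is a measure of how well the blanks get filled in, not a description of what the model has to learn internally to do that well.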

Interested in hearing more about this.

“You just figure out what the next word should be and then write it” is a description of how everyone, GPT or human, completes something like the bar exam. The trick is how you figure out what the next word should be. And the answer to that, for both GPT and humans, is forming representations of meaning and the connections between things that enable you to comprehend sentences and form new sentences in response.

GPT is very good at digesting knowledge and being able to regurgitate that knowledge, re-forged into entirely new semantic constructs. This makes it great at sitting exams. I think to some extent this suggests it is poised to replace humans at some jobs, but I think to a greater extent, it highlights the shortcomings of exams as a measure of whether people are actually prepared to do a job.

btw, I think it’s interesting to consider how little we actually author the words we speak. When I am having a conversation with someone about some subject I haven’t really thought about much before, I open my mouth and words flow out. I don’t think at all about what those words are going to be beforehand. The acts of speaking and thinking are simultaneous, to the extent I’m conscious of any thinking at all. It’s actually quite disconcerting if you really pay attention to it, as it puts the lie to the idea that what I think of as “me” is really in control of very much at all.

Back in my misspent youth, I remember having conversations with friends on MDMA, and things came out of my mouth about myself which I instantly recognised as true, but which I had no conscious realization of before saying them. It’s instant therapy - which is, of course, what MDMA is under study for now. It’s an interesting experience.

You’ve basically landed on non-duality. “This” is all just the universe getting to know itself.

And this is what made the “mental representations” comment interesting to me. Can we see the mental representations AI creates and uses? Can it be forced to show its work?

We humans don’t really control anything. Try to control your thoughts and it becomes obvious, quick, that “you” as we typically conceive of ourselves aren’t actually thinking them.

Yeah the answer to this is definitely no. What is a bit worrying to me about these LLMs is not that I think they’re genuinely conscious Skynet candidates - they are definitely not - but that nobody has the faintest idea how far along the road they are to something like that. Nobody really has any clue how they work. “They just predict the next word” is a description of what they do, not how they do it in such an accomplished way.

Maybe closer to Skynet than we realize then, if we know as little about how AI works as we do about how we ourselves think.

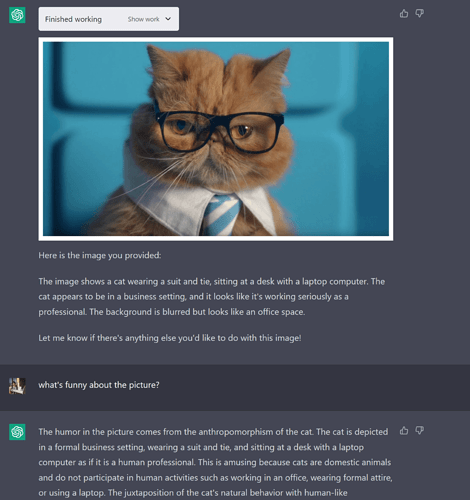

GPT-4 can now accept pictures and describe them.

Stolen from Reddit:

Could this trivialize those picture captchas?

Browsing mode has been added for paid users I believe. Seems to have some expanded potential. (Like Bing but maybe more so/different).

People have been using Bard to summarize and rewrite paywalled articles.